Explainability and Interpretability: The Path to Trustworthy Systems

How Explainable AI (xAI) and Interpretable Machine Learning (IML) Ensure Responsible AI Development

AI is transforming diverse sectors: in finance it detects patterns to prevent fraud, in healthcare it supports disease diagnosis, and in the justice system it assists in parole decisions. As AI rapidly advances, the opacity of "black box" models intensifies the need for transparency [5]. Explainability and interpretability are crucial because they provide insight into how AI systems reach their decisions, which helps keep these systems accountable, fair, and trustworthy.

What are Explainable AI (xAI) and Interpretable ML (IML)?

Explainable AI (xAI) focuses on making AI decisions understandable to human users, while Interpretable Machine Learning (IML) focuses on understanding the internal mechanisms of AI systems. Both are crucial for building trust and fostering transparency in AI applications across various sectors. For example, in hiring algorithms, xAI can help check whether job candidate recommendations are free from gender or racial bias, supporting fairness and compliance with equal opportunity laws.

Overview of various xAI and IML techniques and how they work:

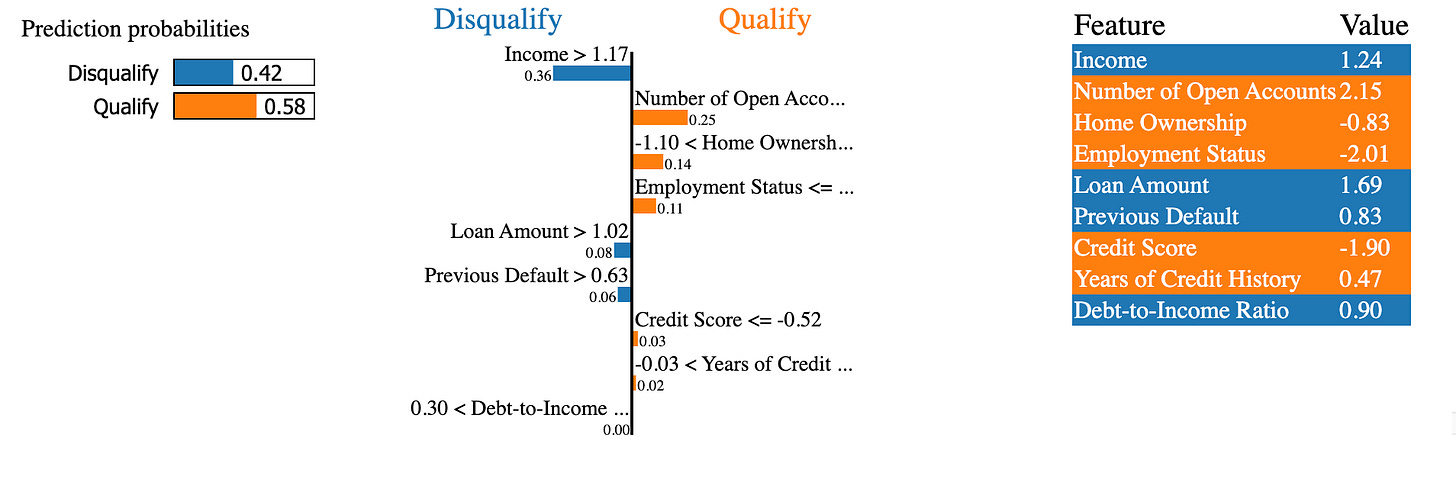

Feature Importance (e.g., LIME [1], SHAP [2]): These techniques quantify the impact of each feature in the input data on the model's prediction. For example, SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) can show which variables most influenced a loan approval decision.
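To make the idea of per-feature impact concrete, here is a minimal sketch of the Shapley value calculation that SHAP builds on, written from scratch rather than with the SHAP library. The `loan_score` function, the applicant values, and the all-zero baseline are hypothetical and only there for illustration; real SHAP implementations use approximations and background data instead of this brute-force loop.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input x relative to a baseline input.

    A feature that is "absent" from a coalition is set to its baseline value.
    The loop visits every subset of features, so it only suits tiny examples.
    """
    n = len(x)
    values = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                values[i] += weight * (predict(with_i) - predict(without_i))
    return values

# Hypothetical loan-scoring model (an assumption, not a real system)
def loan_score(features):
    income, debt, years_employed = features
    return 0.5 * income - 0.8 * debt + 0.1 * income * years_employed

applicant = [4.0, 2.0, 3.0]   # income, debt, years employed (made-up units)
baseline = [0.0, 0.0, 0.0]    # reference point the explanation is relative to
phi = shapley_values(loan_score, applicant, baseline)

for name, value in zip(["income", "debt", "years_employed"], phi):
    print(f"{name}: {value:+.2f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, which is the property that makes this kind of attribution easy to read as "how much each variable pushed the decision".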

Model-specific methods (e.g., attention mechanisms in neural networks): These methods are tailored to specific types of models. Attention mechanisms in neural networks, for instance, highlight the parts of the input (e.g., words in a sentence) that are crucial for the model’s decision, helping to trace the model's focus. The OpenAI Microscope [3] is another example, offering visualizations of every significant layer and neuron of 13 important vision models and providing insights into how these models process visual information. The “Feature Visualization” article by Olah et al. [4] shows how generating inputs that strongly activate a neuron or layer can reveal what that part of a neural network responds to.
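As a rough illustration of how attention weights can be read as "focus", the sketch below computes scaled dot-product attention weights for a single query over four tokens. The token vectors and the query are hand-picked, hypothetical numbers; in a trained network they would be learned, and the weights would be read out of the model rather than computed from made-up inputs.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    return softmax(scores)

# Hypothetical 2-dimensional token vectors (assumed, not from a real model)
tokens = ["the", "loan", "was", "denied"]
keys = [[0.1, 0.0], [0.9, 0.4], [0.0, 0.1], [1.0, 1.2]]
query = [1.0, 1.0]

weights = attention_weights(query, keys)
top_token = tokens[weights.index(max(weights))]

for token, w in zip(tokens, weights):
    print(f"{token:>7}: {w:.2f}")
print("highest attention:", top_token)
```

The weights show where this one query places its focus. They are one signal about the model's behavior, not a full account of why it produced its output.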

Model-agnostic methods: These methods can be applied to any machine learning model because they treat it as a black box and do not need access to its inner workings. They generally involve perturbing the input data and observing how the outputs change in order to infer how the model behaves.
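One common instance of this perturb-and-observe idea is permutation importance: shuffle one feature's column, and measure how much the model's error grows. The sketch below is a minimal, standard-library version under stated assumptions; `black_box` is a hypothetical stand-in for any trained model, and the data is randomly generated for the example.

```python
import random

def permutation_importance(predict, rows, targets, n_repeats=10, seed=0):
    """Average increase in mean squared error when each feature is shuffled.

    The model is only called through predict(), never inspected.
    """
    rng = random.Random(seed)

    def mse(data):
        return sum((predict(r) - t) ** 2 for r, t in zip(data, targets)) / len(data)

    base_error = mse(rows)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild the dataset with only this column shuffled
            perturbed = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mse(perturbed) - base_error)
        importances.append(sum(increases) / n_repeats)
    return importances

# Hypothetical black box: depends on feature 0 and ignores feature 1
def black_box(row):
    return 3.0 * row[0] + 0.0 * row[1]

data_rng = random.Random(1)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
targets = [black_box(r) for r in rows]

scores = permutation_importance(black_box, rows, targets)
print("importance per feature:", scores)
```

Shuffling the feature the model relies on raises the error, while shuffling the ignored feature leaves it unchanged, and nothing in the procedure depends on what kind of model sits behind `predict`.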

The pros and cons of different approaches:

Feature Importance methods:

Pros: They provide clear, quantifiable insights into the impact of specific features, which makes them highly informative for decision-making.

Cons: They can oversimplify the contributions of features in complex models.

Model-specific methods:

Pros: They offer deep insights tailored to a specific model architecture, enhancing model transparency.

Cons: They are limited to certain model types and may require more computational resources.

Model-agnostic methods:

Pros: They are versatile and can be used across different models.

Cons: They may not provide insights into the model's internal mechanics as deep as those from model-specific methods.

Challenges in implementing explainability and interpretability:

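Computational cost: Generating explanations can be resource-intensive, especially for complex models. For example, computing exact Shapley values requires evaluating the model on every subset of features, and the number of subsets grows exponentially with the number of features.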

Potential inaccuracies: There's a risk that the simplifications used to make the model's decisions understandable could lead to inaccuracies in the explanations provided, which might not fully represent the model’s actual workings.

Limitations of current xAI and IML methods and potential risks:

Misleading interpretations: Current xAI methods might produce explanations that are easy to understand but oversimplify or misrepresent how decisions are made. For example, an explanation could imply a causal relationship where there is none, leading to incorrect assumptions about the model's decision-making process.

Incompleteness: Many xAI and IML techniques do not capture the entirety of complex model behaviors, potentially leading to gaps in understanding critical aspects of the AI’s function.

As we rapidly advance toward building Artificial General Intelligence (AGI) and integrate AI into various institutions, it is crucial to invest in and develop xAI and IML techniques. These efforts help ensure that AI systems remain transparent and trustworthy.

In upcoming posts, I will dive deep into the technical details, explore various xAI and IML methods, and explain how they work.

References

[1] Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "'Why should I trust you?': Explaining the predictions of any classifier." Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2016.

[2] Lundberg, Scott M., and Su-In Lee. "A unified approach to interpreting model predictions." Advances in Neural Information Processing Systems. 2017.

[3] OpenAI Microscope. Available here: https://microscope.openai.com/

[4] Olah et al. "Feature Visualization." Distill, 2017. Available here: https://distill.pub/2017/feature-visualization/

[5] “A ‘black box’ AI system has been influencing criminal justice decisions for over two decades – it’s time to open it up”, Available here: https://theconversation.com/a-black-box-ai-system-has-been-influencing-criminal-justice-decisions-for-over-two-decades-its-time-to-open-it-up-200594